We will configure Spark to run in standalone mode, so we download a prebuilt Spark binary that is precompiled against Hadoop. Go to the Spark download page and select the Spark distribution as shown in the snapshot below.

Download Spark-1.6.1 from the link shown there, or download it with `wget` and the same link. Decompress the Spark file into the /DeZyre directory: `tar -xvf spark-1.6.1-bin-hadoop2.4.tgz -C /DeZyre`

Make a softlink to the actual Spark directory (this will be helpful for any future version upgrade): `ln -s spark-1.6.1-bin-hadoop2.4 spark`

Reload the .bashrc file with the command `source ~/.bashrc`

To check the installation, launch the Spark shell with the following command: `spark-shell`

We have successfully configured Spark in standalone mode.
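The softlink step is what makes later upgrades cheap: anything that refers to the `spark` link keeps working when the link is re-pointed at a newer directory. The sketch below illustrates this in a throwaway temporary directory rather than a real install. The `SPARK_HOME` and `PATH` lines are an assumption about what the .bashrc entries could look like (the original does not show them), and `spark-x.y.z-bin-hadoop2.4` is a placeholder name for a later release.

```shell
#!/usr/bin/env bash
# Sketch only: demonstrates the symlink upgrade pattern in a temp directory.
set -eu

base="$(mktemp -d)"

# Stand-in for the unpacked distribution from the tar step.
mkdir -p "$base/spark-1.6.1-bin-hadoop2.4/bin"
ln -s spark-1.6.1-bin-hadoop2.4 "$base/spark"

# Assumed .bashrc entries: they reference the link, never a versioned path.
export SPARK_HOME="$base/spark"
export PATH="$SPARK_HOME/bin:$PATH"

# Simulated upgrade: unpack a newer (placeholder) version and re-point the link.
mkdir -p "$base/spark-x.y.z-bin-hadoop2.4/bin"
ln -sfn spark-x.y.z-bin-hadoop2.4 "$base/spark"

# SPARK_HOME is unchanged, but it now resolves to the new directory.
resolved="$(readlink "$base/spark")"
echo "$SPARK_HOME -> $resolved"
```

Because only the link changes, the .bashrc entries do not need to be edited again after an upgrade.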


Register the JDK tools with `update-alternatives`:

`sudo update-alternatives --install /usr/bin/java java /DeZyre/jdk1.8.0_51/bin/java 1`

`sudo update-alternatives --install /usr/bin/javac javac /DeZyre/jdk1.8.0_51/bin/javac 1`

`sudo update-alternatives --install /usr/bin/jps jps /DeZyre/jdk1.8.0_51/bin/jps 1`

Then set them as the defaults:

`sudo update-alternatives --set jar /DeZyre/jdk1.8.0_51/bin/jar`

`sudo update-alternatives --set javac /DeZyre/jdk1.8.0_51/bin/javac`

This installs Java 8 on the machine. You can check it using the command `java -version`. Next, we install Spark on the machine.
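The `java -version` check can also be scripted, which is handy when several machines need the same setup. The following is a minimal sketch, not part of the original tutorial: `java_major` is a hypothetical helper that reduces a version string such as `1.8.0_51` to its major number, and the script only reports what it finds.

```shell
#!/usr/bin/env bash
# Sketch: report the major version of the java binary on PATH.

# java_major is a hypothetical helper, not a standard tool.
# It maps "1.8.0_51" to 8 and "11.0.2" to 11.
java_major() {
  local v="$1"
  case "$v" in
    1.*) v="${v#1.}" ;;   # old scheme: 1.<major>.<minor>_<update>
  esac
  echo "${v%%[._]*}"      # keep everything before the first "." or "_"
}

if command -v java >/dev/null 2>&1; then
  # java -version prints to stderr; the version is the first quoted string.
  raw="$(java -version 2>&1 | awk -F'"' '/version/ {print $2; exit}')"
  echo "Java major version: $(java_major "$raw")"
else
  echo "java not found on PATH"
fi
```

With the JDK from this tutorial selected, the reported major version should be 8.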


Decompress the downloaded tarball of the Java JDK.

Update the available files in your default Java alternatives so that Java 8 is referenced for all applications: `sudo update-alternatives --install /usr/bin/jar jar /DeZyre/jdk1.8.0_51/bin/jar 1`

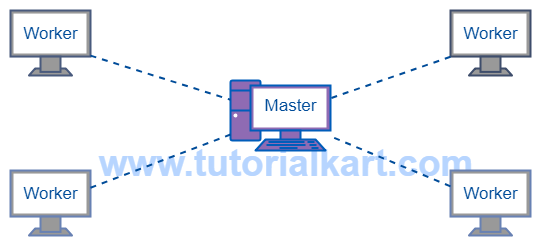

This tutorial presents a step-by-step guide to install Apache Spark. Spark can be configured with multiple cluster managers such as YARN and Mesos. It can also be configured in local mode and standalone mode. Standalone deploy mode is the simplest way to deploy Spark on a private cluster; both driver and worker nodes run on the same machine. With YARN, the driver runs inside an application master process which is managed by YARN on the cluster. Standalone mode is a good fit for developing applications in Spark.

Java should be pre-installed on the machines on which we have to run Spark jobs, so let's install Java before we configure Spark.

Note: This tutorial uses an Ubuntu box to install Spark and run the application.

Change to the directory where you wish to install Java. This tutorial has used the "/DeZyre" directory.

Download the Java JDK (this tutorial uses Java 8, however Java 7 is also compatible): `wget --no-cookies --no-check-certificate --header "Cookie: gpw_e24=http%3A%2F% oraclelicense=accept-securebackup-cookie"`
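The deployment modes listed at the start of this section are chosen at launch time through the `--master` option of `spark-shell` and `spark-submit`. The sketch below shows how the value differs per mode. `master_url` is a hypothetical helper written for illustration, the host name is an assumption, and 7077 and 5050 are the default ports for a standalone master and a Mesos master. YARN is left out because the master string it expects differs between Spark versions.

```shell
#!/usr/bin/env bash
# Sketch: build the --master value for a given deployment mode.

# master_url is a hypothetical helper, not part of Spark.
master_url() {
  local mode="$1" host="${2:-localhost}"
  case "$mode" in
    local)      echo "local[*]" ;;               # driver and executors in one JVM
    standalone) echo "spark://${host}:7077" ;;   # Spark's own cluster manager
    mesos)      echo "mesos://${host}:5050" ;;   # Mesos master
    *)          echo "unknown mode: $mode" >&2; return 1 ;;
  esac
}

# Print the command instead of running it, so the sketch works without Spark.
echo "spark-shell --master $(master_url standalone)"
```

Running `spark-shell` with no `--master` option uses the master configured for the installation, or local mode if none is configured.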
